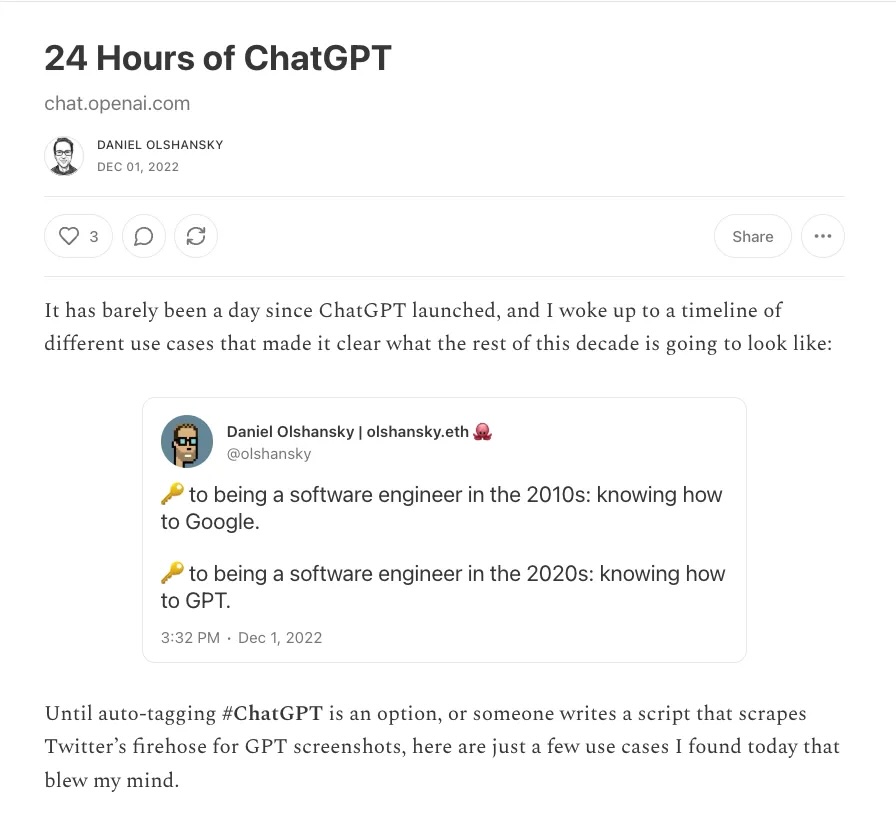

On December 1st, 2022, I published a blog post titled “24 hours of ChatGPT”.

It was one of the first times in my life when I realized how utterly wrong I could be about something.

I vividly remember building a prototype of a standalone Twitter DMs app as an intern in 2012, the day before my 20th birthday. Around then, I read a post about how Google had used machine learning to detect cats. That moment was exciting, but it was nothing like what arrived a decade later.

From 2016 to 2022, I worked in ML evals and engineering. I saw GPT-2 experiments. I was confident we were heading into an era of ML-assisted engineering, but I did not anticipate anything near the scale of what unfolded.

Three years after the ChatGPT moment, I want to quickly document what I missed, what I got right, and what I think comes next. [1]

Quick Reflection

Today, I use Gemini, Codex and Claude in every part of my life. Personal, technical, creative and operational. Every day. It still feels like we are only at the start.

It is strange to remember that Microsoft’s $10B investment once shocked people. Now that we understand the scale of what this technology can do, $10B still sounds large, but it’s no longer jaw-dropping.

Recurring Thoughts

Here are the mantras I keep returning to in conversations about AI:

- Artificial General Intelligence is already here. Artificial General Intuition is not.

- AI should be thought of as an Amplification of Intelligence, not Artificial Intelligence.

- Data quality is king. Data quantity is a given. Data uniqueness is the golden goose.

Things I was wrong about 3 years ago

Audio: I did not think we would be talking to agents in a “Her”-style interaction loop. The flow is not perfect, but it is far better than I ever expected.

AI Agents: I did not believe agents would automate coding to the degree they have. If I don’t have access to an AI agent, I don’t bother coding anymore because it’s not a good use of time. The delta in productivity is that large. [2]

Things I was right about 3 years ago

Fine-Tuning: Fine-tuning was overrated in 2022. It was useful for tone, but for the compute and cost involved, it didn’t outperform longer context or simple retrieval. This is finally changing with reinforcement fine-tuning.

Benchmarks: Benchmarks were overrated then and still are today. They serve as an important data point, but they’re not a solution. Like GPAs or SAT scores, they can be gamed or trained on, they contain many false positives, and they don’t necessarily translate into useful output.

Vibe Checks: I published “Vibe Checks Are All You Need” at a time when people didn’t want to admit that they relied on intuition. There is no silver bullet. What works is a mix of evals, benchmarks, vibe checks and day-to-day collaboration with the model.

Expert Labelling: High-quality labelling by domain experts is critical. Several data labelling companies have scaled to 9-figure revenue, and I believe the opportunity is still out there. Over time, humanity will need to build “Golden Data Sets” for every industry.

1-Year Predictions

AI Base Stations: Local inference stations will begin to proliferate. Teams and households will run small models locally to reduce cloud costs and operate offline. They will become as normal as a network-attached storage (NAS) device at home or in the office.

Shopping: 2026 will be the year of agentic e-commerce. AI will make at least 10% of my online purchases on my behalf. It is already heavily involved in almost every decision leading up to a purchase.

Consolidation: The current tooling landscape is too fragmented. Redundant products will be absorbed by the incumbents. The human capital tied up in duplicated efforts needs to be redirected and unlocked.

Audio: Alexa, Google Home, Siri and their peers are overdue for their renaissance. The upgrade cycle is coming.

Reinforcement Fine-Tuning: Small, niche, expert-labelled (i.e. golden) data sets will fine-tune open models in ways that produce real, measurable results.

1-Year Anti-Predictions

Traditional Industries: Over the last few months, I have met many people in space, construction, and other physical-world industries who are adopting AI. The momentum is real, but the step-function change will not happen within the next year.

Energy Issues: Everyone is talking about energy requirements, and now is the time to start preparing. However, I do not expect enterprises or consumers to hit hard limits anytime soon.

Synthetic Data: This is important, and it will be helpful, but it will not be transformative.

5-Year Predictions

Software Engineers: Writing code by hand will feel like low-level memory management. It will still exist, but it will be a specialized slice of the industry, not part of its core.

World Models: We will see new frontier labs built around world models. They will not produce a ChatGPT-scale economic shock, but they will unlock agentic robotics.

Generational Adoption: As the first AI-native generation enters fields like medicine, we will start seeing real day-to-day adoption by practitioners.

Context Engineering: Every serious company will have a dedicated team for context engineering. It will become a discipline in its own right.

AI Bootcamps: Coding bootcamps are out and AI bootcamps are in. AI bootcamps will train people to understand the fundamentals, build intuition, prepare data pipelines, design evals, deploy systems, and more. Companies will grow this talent in-house rather than relying on third-party companies with forward-deployed engineers.

5-Year Anti-Predictions

Software Architects: Architects will still be necessary. They will guide, review and design large systems. AI will not displace this in the next five years.

Breakthroughs: The foundational breakthroughs in AI happened in the last decade. The foundational breakthroughs in math, physics, construction, and energy all happened in the last 50-100 years. From here on, what we have to look forward to is engineering, refinement, scale and integration.

Venting: AI will still be a poor outlet for emotional venting.

Data: Enterprises will continue to struggle to organize and use their data effectively.

Intuition: Artificial intuition will still be a research problem.

Superintelligence: I do not expect superintelligence in this window.

Safety: Dangerous individuals misusing AI will remain a greater risk than AI itself.

Audio: Always-on audio will remain niche. Limitless-style products were a bigger flop than I expected.

[1] There is no cost to making public predictions. Terms like intuition, quality, or superintelligence each deserve an essay of their own. I am skipping the qualifiers.

I’m very laissez-faire with the terminology here, so take everything with a grain of salt.

Alternatively, if any of it resonated, I’m always down for a long walk or a private 3-6 person dinner to dive into the details. You can find my contact details here.

[2] As a quick bonus, the best definition of AI agents that I’ve found so far is from this SurgeAI post:

[…] A model can reliably use tools to achieve specific goals. The next step is whether it can break a task into meaningful goals and develop a multi-step plan to accomplish them. Models that can’t do this reliably aren’t agents; they’re chatbots with access to tools.