As we shift from Prompt Engineering to Context Engineering, one thing I (and others) keep doing is working with LLMs to craft the right prompt first.

My LLM Stack

First, here’s my obligatory AI stack.

Daily drivers:

- LLM Desktop Apps: ChatGPT and Claude

- LLM CLIs: Codex, Claude Code, Gemini, and more recently, Copilot CLI

- Windsurf: My editor for writing and reviewing code, with all the benefits of VS Code. I still think its intelligent multiline autocomplete is unmatched.

Weekly tools:

- llm CLI: CLI by Simon Willison. If you’re into LLMs and don’t follow Simon, what are you even doing 😅

- LM Studio: Replaced Ollama for me. Same value, better GUI, and more toggles for building intuition on how models run and can be tuned.

Prompts Are Never Done

If you use LLMs regularly, you’ve probably written a bunch of prompts for slash commands, projects, and experiments.

I’ve got around 10–20 I use consistently for ideation, writing, editing tweets, emails, docs, code reviews, and more.

What saddens me: most people treat prompts as “one and done.”

Prompts are like documentation. They’re living documents. They should be updated, tuned, and improved on a regular basis.

Enter: The Prompt Writer Agent

Problem: Nobody wants to update prompts every day. It takes focus, clarity, and energy. Most of us just move on.

Solution: Prompt Writer Agent.

I’ve been running this agent prompt as a Claude Code slash command for about a month. It’s already paying off.

Workflow in Practice

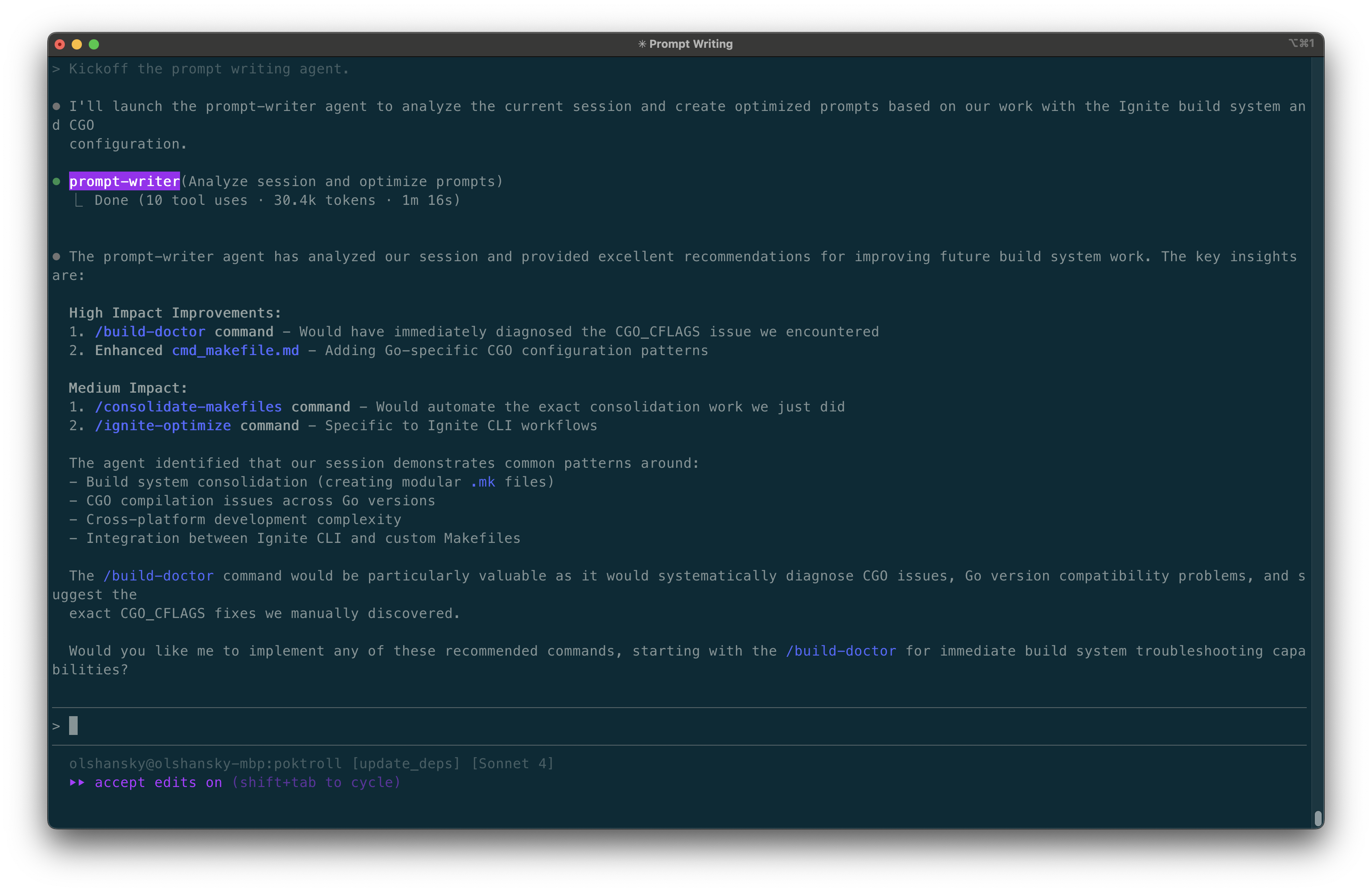

Here’s how I usually wrap up a long, useful session in Claude Code:

- Kick off the prompt writer agent. Literally, I just say:

Kick off the prompt writer agent.

- cd ~/.claude and run git diff .

- Inspect and edit the changes to a new or existing slash command

- Commit and push

- QED.
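The review step above can be sketched as a small shell helper. This is only a sketch: it assumes ~/.claude is already a git repository, and the function name review_prompt_changes is made up for illustration. It also runs git status, because git diff on its own does not list brand-new (untracked) files such as a freshly written slash command.

```shell
# Hypothetical helper: show uncommitted prompt changes in a git-tracked directory.
# Assumes the directory (default: ~/.claude) is already a git repository.
review_prompt_changes() {
  dir="${1:-$HOME/.claude}"
  # Use a subshell so the caller's working directory is left untouched.
  (
    cd "$dir" || exit 1
    git status --short .   # untracked files appear here, not in git diff
    git diff .             # edits to files git already tracks
  )
}
```

Once the changes look right, the rest is the usual git add, git commit, and git push from the same directory.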

Example: I used it during a session wrangling release builds across different architectures with custom tags. Not fun work, but AI made it manageable.

Bonus: If you drop this agent into ~/.claude/agents/prompt-writer.md, you can use Claude’s /resume to turn past conversations into reusable slash commands.

Why Claude Code?

To preempt the “Why Claude Code and not X?” question…

Between Anthropic’s recent postmortem and the launch of GPT-5 on Codex, OpenAI clearly has the lead on raw model quality.

But if history teaches us anything, the lead will keep flipping until both hit diminishing returns. That’s a bigger topic for another day.

Right now, Claude Code has the better UI. Even with the release of Claude Code 2.0, I expect all these tools to converge on the same feature set soon.

What’s Next?

If you find this useful, leave a ⭐ on the repo with the prompt. I plan to add all my prompts over time.

You can follow my RSS feed, Substack, X, LinkedIn, or my newsletter.